This classifier sorts audio into seven categories: the sitar, the bansuri, the sarangi, the sarod, the tabla, the harmonium and Western music (a catch-all category for any piece of music which does not fit into the other six). It was the first of our projects that could truly be called ours: we wrote its code, and we proposed and then decided on its purpose. Like the genre classifier, its current version trains on embeddings provided by the YAMNet model, meaning that we let that much more advanced model process the audio before feeding the result to our own model.

A More Technical Perspective

Our database consists of around 1070 files, each 15 seconds long, cut from audio harvested from YouTube. Each file features one prominent instrument being played, with no accompaniment. However, when we first cut the files, we mistakenly took only the first fifteen seconds of each source clip. We did not notice this serious mistake until later, and it contributed greatly to our model's low initial performance.
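One way to avoid taking only the opening of each clip is to compute segment boundaries across its whole length. The sketch below is illustrative only and is not the cutting script we used; the function name `segment_bounds` is hypothetical, and it assumes any leftover audio shorter than 15 seconds is simply dropped.

```python
def segment_bounds(duration_s, segment_s=15.0):
    """Return (start, end) times in seconds for every full segment in a clip.

    Hypothetical helper: a trailing remainder shorter than segment_s is dropped.
    """
    count = int(duration_s // segment_s)
    return [(i * segment_s, (i + 1) * segment_s) for i in range(count)]


# A 70-second clip yields four 15-second segments, not just the first one.
print(segment_bounds(70.0))
```

Each pair of times can then be handed to whatever audio tool does the actual cutting.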

MFCC Instrument Classification

First, we extracted forty MFCC features from each file and used them to train our model. The model had only one hidden layer of 100 neurons, as enlarging the network brought no observable advantage. It reported a training accuracy of 1 and a dev set accuracy of 1 but a test accuracy of only 0.58, a sign of severe overfitting. One can see the breakdown of its performance below.
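A minimal sketch of this setup is given below. It is not our original code: it assumes `librosa` for the MFCCs and scikit-learn's `MLPClassifier` for the network, and it assumes the forty coefficients are averaged over time to give one vector per file. The function names are hypothetical.

```python
def clip_features(path, n_mfcc=40):
    """Return one n_mfcc-dimensional feature vector for an audio file.

    Assumption for this sketch: per-frame MFCCs are averaged over time.
    """
    import librosa  # third-party; imported here so the file loads without it

    y, sr = librosa.load(path, sr=None)  # keep the file's own sample rate
    mfcc = librosa.feature.mfcc(y=y, sr=sr, n_mfcc=n_mfcc)  # (n_mfcc, frames)
    return mfcc.mean(axis=1)


def build_mfcc_model(hidden_units=100):
    """Return an untrained network with a single hidden layer."""
    from sklearn.neural_network import MLPClassifier  # third-party

    return MLPClassifier(hidden_layer_sizes=(hidden_units,), max_iter=500)
```

Training would then amount to stacking the vectors from `clip_features` into a matrix and calling `fit` on the model with the matching instrument labels.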

| Actual category | Total | Sarangi | Sarod | Sitar | Bansuri | Tabla | Harmonium |
| --- | --- | --- | --- | --- | --- | --- | --- |
| Sarangi | 20 | 10 | 1 | 1 | 1 | 3 | 4 |
| Sarod | 20 | 4 | 10 | 0 | 2 | 2 | 2 |
| Sitar | 22 | 0 | 0 | 9 | 7 | 5 | 1 |
| Bansuri | 18 | 3 | 2 | 0 | 6 | 7 | 0 |
| Tabla with Harmonium | 20 | 0 | 0 | 2 | 0 | 17 | 1 |
| Tabla with Sarangi | 17 | 0 | 0 | 0 | 0 | 16 | 1 |
| Harmonium | 19 | 0 | 0 | 1 | 0 | 7 | 11 |

In the table above, the labels on the left show the actual category, while the labels on the top show how many of those files our model assigned to each category. The Total column gives the number of files in each row.

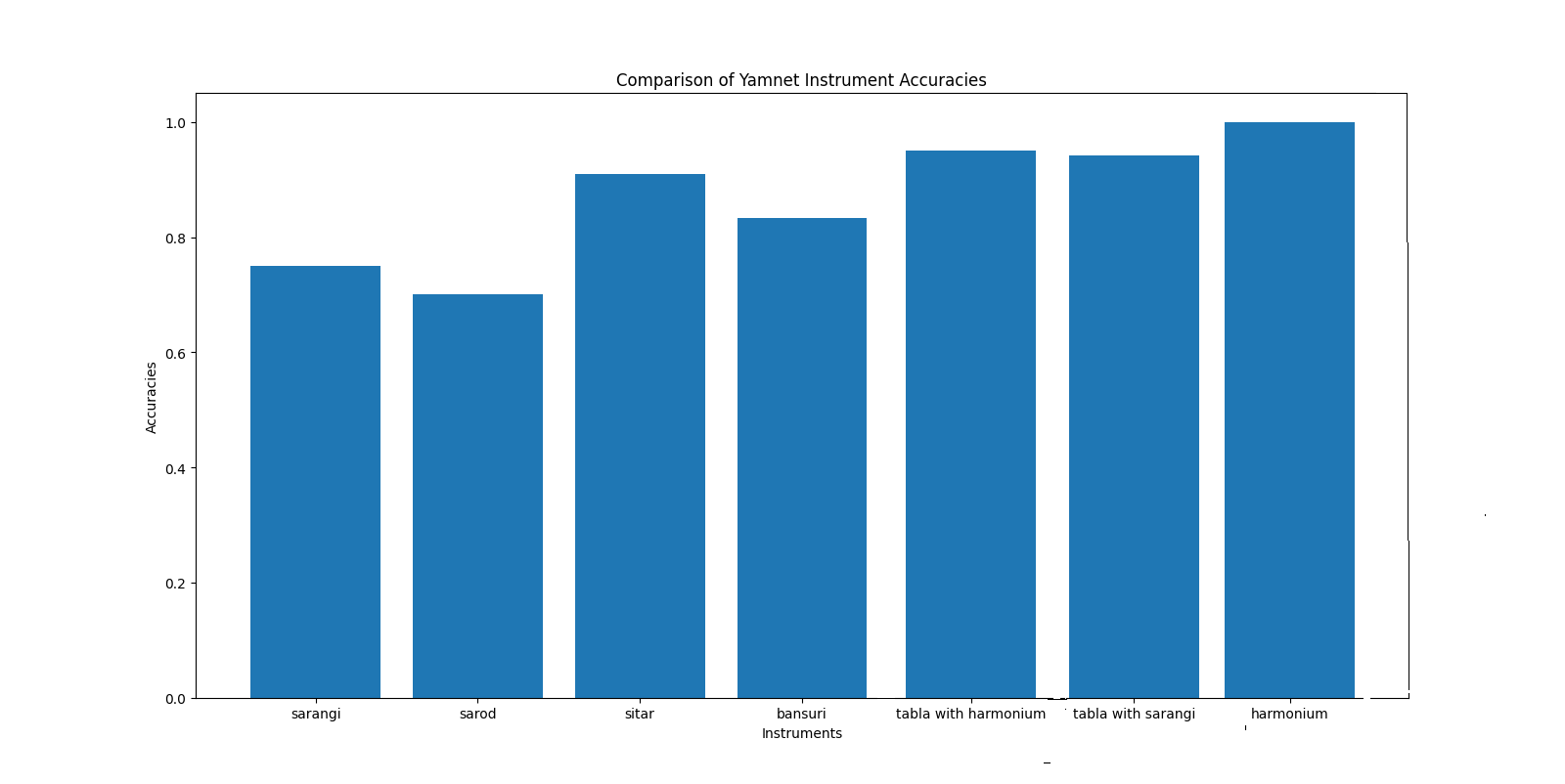
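The overall accuracy can be recomputed from the table. The short sketch below copies the counts from the table and assumes that both "Tabla with Harmonium" and "Tabla with Sarangi" count as correct when the model predicts Tabla; under that assumption the result matches the reported 0.58. The names `ROWS`, `TARGET`, `recall` and `overall_accuracy` are ours for illustration.

```python
# Predicted counts per actual category, in the column order of the table.
COLUMNS = ["Sarangi", "Sarod", "Sitar", "Bansuri", "Tabla", "Harmonium"]
ROWS = {
    "Sarangi": [10, 1, 1, 1, 3, 4],
    "Sarod": [4, 10, 0, 2, 2, 2],
    "Sitar": [0, 0, 9, 7, 5, 1],
    "Bansuri": [3, 2, 0, 6, 7, 0],
    "Tabla with Harmonium": [0, 0, 2, 0, 17, 1],
    "Tabla with Sarangi": [0, 0, 0, 0, 16, 1],
    "Harmonium": [0, 0, 1, 0, 7, 11],
}
# Assumption: both tabla rows are correct when the prediction is "Tabla".
TARGET = {"Tabla with Harmonium": "Tabla", "Tabla with Sarangi": "Tabla"}


def correct_count(label):
    """Number of files in a row that landed in the right column."""
    return ROWS[label][COLUMNS.index(TARGET.get(label, label))]


def recall(label):
    """Fraction of one row's files that were classified correctly."""
    return correct_count(label) / sum(ROWS[label])


def overall_accuracy():
    """Correct predictions divided by all test files in the table."""
    total = sum(sum(counts) for counts in ROWS.values())
    return sum(correct_count(label) for label in ROWS) / total


print(round(overall_accuracy(), 2))  # 79 correct out of 136 files -> 0.58
for label in ROWS:
    print(f"{label}: {recall(label):.2f}")
```

The per-row figures make the weak spots easy to see: the bansuri and sitar rows are the ones most often mistaken for other categories.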

YAMNet Instrument Classification

We then switched to the YAMNet model, as it had worked quite well on the earlier genre-classification problem. We reused the same training database and adapted our YAMNet classification code for this purpose. Once more the model reported a training and dev accuracy of 1, but this time a test accuracy of 0.88. It again had only one hidden layer, now with 512 neurons, and trained on the embeddings extracted by the YAMNet model. You can see a breakdown of its performance, and how it compares with that of the MFCC-based model, below.
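A rough sketch of this arrangement follows; it is not our original code. It assumes the YAMNet model published on TensorFlow Hub, which returns a 1024-value embedding for each short frame of 16 kHz mono audio, and it assumes those frames are averaged into one vector per clip before reaching our network. The function names and the choice of activations are illustrative.

```python
YAMNET_URL = "https://tfhub.dev/google/yamnet/1"  # TensorFlow Hub handle
EMBEDDING_DIM = 1024  # size of each YAMNet embedding frame
HIDDEN_UNITS = 512    # hidden-layer width quoted in the text


def clip_embedding(yamnet, waveform):
    """Average YAMNet's per-frame embeddings into one vector for a clip.

    `waveform` must be mono float32 audio at 16 kHz with values in [-1, 1].
    Averaging over frames is an assumption made for this sketch.
    """
    _scores, embeddings, _spectrogram = yamnet(waveform)
    return embeddings.numpy().mean(axis=0)  # shape (1024,)


def build_head(num_classes):
    """Return an untrained network with one hidden layer on top of YAMNet."""
    import tensorflow as tf  # third-party; imported lazily

    return tf.keras.Sequential([
        tf.keras.Input(shape=(EMBEDDING_DIM,)),
        tf.keras.layers.Dense(HIDDEN_UNITS, activation="relu"),
        tf.keras.layers.Dense(num_classes, activation="softmax"),
    ])
```

With TensorFlow and TensorFlow Hub installed, `hub.load(YAMNET_URL)` returns the model to pass in as `yamnet`, and `build_head(6)` gives a network for the six instrument categories used at this stage.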

We then added the category of Western music, which consisted only of violin and piano solos. After this the model reported an accuracy of 0.9, alongside an accuracy of 1 on both the piano and tabla test cases. This was rather surprising to us: after our experience of adding classes to our genre-identifying model, we had expected another class to decrease the overall accuracy considerably. Instead, it remained mostly the same. This is a prime example of how we truly learned machine learning throughout our journey: by actually experimenting to test our assumptions and, more often than not, making mistakes and learning from them.


you guys are doing great work. I want to buy a classic sitar for my daughter. Can you help


Hello!

We do not buy/sell instruments, but here is the contact number of Lahore-based sitar-maker Ziauddin’s son Kashan, who works with him at their shop: 03055672493. Please let us know if you have any other questions!
